Understanding Build Parallelism with llama.cpp

When building large C++ projects like llama.cpp, compilation time can significantly impact development workflows. Modern systems with many CPU cores promise faster builds through parallelization, but Amdahl's Law suggests there are fundamental limits to these speedups. Let's explore this through real-world build metrics.

The Experiment

Using a 24-core/48-thread AMD Threadripper system with 128GB RAM and fast SSD storage, we tested llama.cpp compilation with varying levels of parallelism. Here are the raw build times with different -j flags:

make -j 12 GGML_CUDA=1 880.15s user 58.56s system 875% cpu 1:47.27 total

make -j 24 GGML_CUDA=1 914.84s user 59.86s system 1318% cpu 1:13.90 total

make -j 48 GGML_CUDA=1 1138.14s user 80.67s system 1896% cpu 1:04.28 total

make -j 60 GGML_CUDA=1 1140.60s user 75.55s system 1916% cpu 1:03.46 total

make -j 72 GGML_CUDA=1 1150.55s user 76.05s system 1908% cpu 1:04.25 total

make -j 96 GGML_CUDA=1 1150.95s user 76.56s system 1946% cpu 1:03.07 total

Analyzing the Results

Looking at these numbers reveals several interesting patterns:

- Initial Scaling: Moving from 12 to 24 jobs reduces build time from 107s to 74s, about 1.45x faster for twice the jobs

- Sweet Spot: Build time levels off at about 64s around 48 jobs, matching our thread count

- Diminishing Returns: Going from 48 to 96 jobs changes build time by only about a second (64.28s to 63.07s), which is small enough to be run-to-run variation

- CPU Utilization: Increases from 875% to 1946% (about 2.2x), while wall-clock time improves by only about 1.7x
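To put numbers on these patterns, the timings can be parsed and compared against the -j 12 run. The following is a minimal Python sketch, not part of the original measurements. The regular expression assumes the zsh-style time output shown above, and the names RAW, parse_timings, and summarize are made up for illustration.

```python
import re

# Timing lines copied from the experiment above (zsh-style `time` output).
RAW = """\
make -j 12 GGML_CUDA=1 880.15s user 58.56s system 875% cpu 1:47.27 total
make -j 24 GGML_CUDA=1 914.84s user 59.86s system 1318% cpu 1:13.90 total
make -j 48 GGML_CUDA=1 1138.14s user 80.67s system 1896% cpu 1:04.28 total
make -j 60 GGML_CUDA=1 1140.60s user 75.55s system 1916% cpu 1:03.46 total
make -j 72 GGML_CUDA=1 1150.55s user 76.05s system 1908% cpu 1:04.25 total
make -j 96 GGML_CUDA=1 1150.95s user 76.56s system 1946% cpu 1:03.07 total
"""

# Captures: job count, user seconds, system seconds, wall minutes, wall seconds.
LINE = re.compile(
    r"-j (\d+) .*? ([\d.]+)s user ([\d.]+)s system \d+% cpu (\d+):([\d.]+) total"
)


def parse_timings(raw):
    """Turn raw timing lines into dicts with jobs, CPU-seconds, and wall-seconds."""
    rows = []
    for line in raw.splitlines():
        m = LINE.search(line)
        if m is None:
            continue  # skip blank or unrecognized lines
        jobs, user, system, minutes, seconds = m.groups()
        rows.append({
            "jobs": int(jobs),
            "cpu_s": float(user) + float(system),
            "wall_s": int(minutes) * 60 + float(seconds),
        })
    return rows


def summarize(rows):
    """Compare every run against the first row (here: -j 12)."""
    base = rows[0]
    return [
        {
            "jobs": r["jobs"],
            "wall_s": r["wall_s"],
            "speedup": base["wall_s"] / r["wall_s"],
            "cpu_ratio": r["cpu_s"] / base["cpu_s"],
        }
        for r in rows
    ]


for row in summarize(parse_timings(RAW)):
    print(f"-j {row['jobs']:>2}: {row['wall_s']:7.2f}s wall, "
          f"{row['speedup']:.2f}x vs -j 12, "
          f"{row['cpu_ratio']:.2f}x CPU-seconds vs -j 12")
```

On this data the speedup over -j 12 tops out at about 1.7x, while total CPU-seconds (user plus system) grow by about 25% between 24 and 48 jobs. One plausible reading, which these measurements alone can't confirm, is that hardware threads sharing a physical core each run slower than a thread that has the core to itself.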

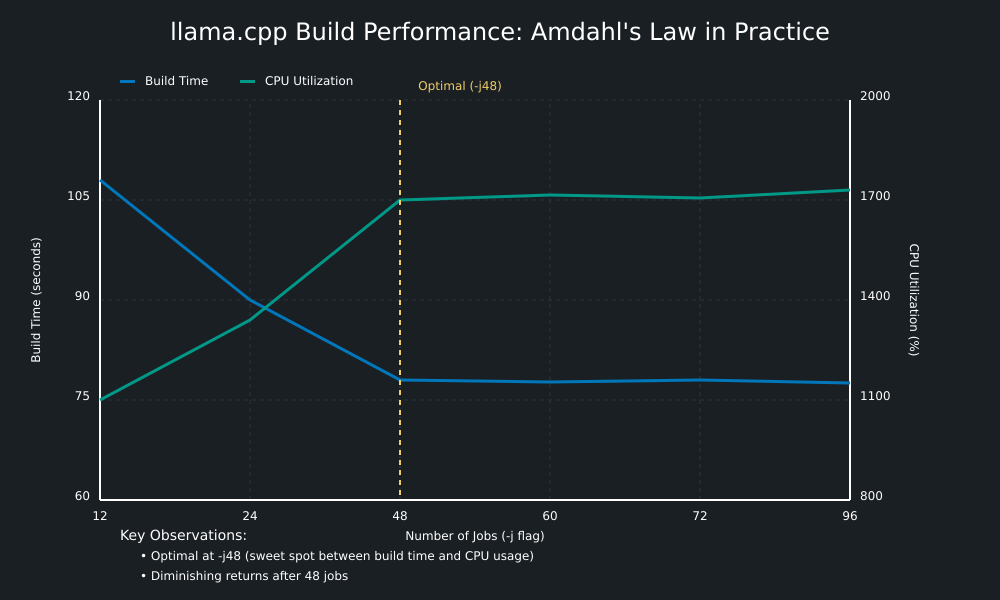

Visualizing the Data

To better understand these patterns, let's look at a visualization of the data:

The graph shows:

- Compile time (blue line) dropping rapidly initially, then plateauing

- CPU utilization (green line) continuing to rise

- Optimal point at 48 jobs (yellow reference line)

Amdahl's Law in Practice

This performance pattern is a practical illustration of Amdahl's Law, which states that the speedup a program can gain from parallel computing is limited by the portion of the program that can't be parallelized. In C++ compilation:

- Parallelizable Parts:

  - Individual source file compilation

  - Some preprocessing tasks

  - Some linking operations

- Serial Bottlenecks:

  - File I/O operations

  - Final linking stages

  - Dependency resolution
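Amdahl's Law can be applied to these timings in a simple form: model wall time with N jobs as T(N) = T_serial + T_parallel / N, then solve for the two unknowns from two measurements. The sketch below is only illustrative. It assumes the -j 12 and -j 24 runs follow that model exactly (both fit within the 24 physical cores), it ignores SMT and memory effects, and the helper names fit_serial_parallel and predict are hypothetical.

```python
def fit_serial_parallel(n1, t1, n2, t2):
    """Solve T(N) = t_serial + t_parallel / N from two (jobs, wall-seconds) points."""
    t_parallel = (t1 - t2) / (1.0 / n1 - 1.0 / n2)
    t_serial = t1 - t_parallel / n1
    return t_serial, t_parallel


def predict(t_serial, t_parallel, jobs):
    """Wall time the simple Amdahl-style model predicts for a given job count."""
    return t_serial + t_parallel / jobs


# Wall-clock times from the experiment: 1:47.27 at -j 12 and 1:13.90 at -j 24.
t_serial, t_parallel = fit_serial_parallel(12, 107.27, 24, 73.90)

# Fraction of the modeled single-job build time that never parallelizes.
serial_fraction = t_serial / (t_serial + t_parallel)

print(f"serial term:     {t_serial:.1f}s")
print(f"parallel term:   {t_parallel:.1f}s")
print(f"serial fraction: {serial_fraction:.3f}")
print(f"speedup ceiling: {1.0 / serial_fraction:.1f}x over one job")
for jobs in (12, 24, 48, 96):
    print(f"predicted wall time at -j {jobs}: {predict(t_serial, t_parallel, jobs):.1f}s")
```

Under these assumptions the fit gives a serial term of roughly 40s and predicts about 57s at 48 jobs, versus the measured 64s. The gap suggests the extra 24 hardware threads deliver less than 24 cores' worth of work, so the fit is better read as a rough bound than as a measurement.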

Practical Implications

For development workflows, these findings suggest:

- Start with jobs matching your thread count (-j48 in this case)

- Monitor both build time and system responsiveness

- Consider backing off slightly for better system usability

- Remember that more parallelism isn't always better
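As a starting point, the job count can be derived from the machine rather than hard-coded. This is a small sketch under stated assumptions: os.cpu_count() reports logical CPUs (48 on the system above), and the suggest_jobs helper and its reserve parameter are hypothetical names introduced here for illustration.

```python
import os


def suggest_jobs(reserve=0, logical_cpus=None):
    """Return a -j value: logical CPU count minus `reserve`, never below 1."""
    if logical_cpus is None:
        # os.cpu_count() can return None if the count is undetermined.
        logical_cpus = os.cpu_count() or 1
    return max(1, logical_cpus - reserve)


# Leave a couple of hardware threads free so the desktop stays responsive.
print(f"make -j {suggest_jobs(reserve=2)} GGML_CUDA=1")
```

How many threads to reserve is a judgment call. The timings above suggest that giving up a few jobs near the thread count costs very little build time.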

Configuration Recommendations

Based on our findings:

- For builds where system responsiveness matters: -j48

- For maximum throughput in CI/CD: -j60

- For lighter development loads: -j24

Conclusions

Amdahl's Law isn't just theoretical - it has practical implications for daily development tasks. Understanding these limits helps set realistic expectations and optimize build configurations appropriately.

The next time someone suggests "just add more jobs" to speed up compilation, you'll understand why that might not help beyond a certain point!

Want to experiment with your own build metrics? Try timing your builds with different -j values and graphing the results. The patterns might surprise you!
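One way to collect such timings is a small harness that runs the build once per job count and records the wall-clock time. The sketch below is a starting point rather than the script used for the numbers above. The function names time_command and sweep are hypothetical, and it measures only wall time, not the user and system times that the shell's time reports.

```python
import subprocess
import time


def time_command(cmd):
    """Run a command to completion and return its wall-clock time in seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True,
                   stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return time.perf_counter() - start


def sweep(job_counts, build_cmd, clean_cmd=None):
    """Time one build per job count.

    build_cmd: function mapping a job count to an argv list.
    clean_cmd: optional argv list run before each build so every run
               starts from the same state.
    """
    results = {}
    for jobs in job_counts:
        if clean_cmd is not None:
            subprocess.run(clean_cmd, check=True,
                           stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
        results[jobs] = time_command(build_cmd(jobs))
    return results
```

From the llama.cpp source directory, a call such as sweep([12, 24, 48], lambda j: ["make", "-j", str(j), "GGML_CUDA=1"], clean_cmd=["make", "clean"]) would reproduce the shape of the experiment above, assuming your tree builds with make and provides a clean target. Running each configuration more than once helps separate real differences from noise.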