Benchmarking AWS Lambda: Python vs. Rust for Data Engineering Workloads

In this post, we'll benchmark Python and Rust Lambda functions on AWS and compare their performance. The workload is a computationally intensive task, a naive recursive Fibonacci calculation, and we'll analyze how language choice, memory allocation, and algorithmic optimization affect the results. We'll also estimate costs for a hypothetical Fortune 500 data engineering workload to show the potential savings of using Rust over Python.

Introduction

AWS Lambda is a popular serverless computing service that allows you to run code without managing servers. However, the choice of programming language and optimization techniques can significantly impact performance and cost. In this post, we'll:

- Deploy Python and Rust Lambda functions.

- Benchmark their performance for a computationally intensive task.

- Analyze the results and estimate costs for large-scale workloads.

Setup

Prerequisites

Before diving in, ensure you have the following installed:

- AWS CLI: Configured with credentials.

- Python: For local testing and development.

- Rust: For local testing and development.

- jq: For JSON parsing in Bash scripts.

Installation

- Clone the repository:

git clone https://codeberg.org/noahgift/AWS-Gen-AI.git

cd AWS-Gen-AI/python-vs-rust/rust-benchmark

- Make the scripts executable:

chmod +x benchmark.sh update-memory.sh

Lambda Functions

Python Lambda Function

The Python Lambda function calculates the Fibonacci sequence recursively. Here's the code:

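    from time import perf_counter
    import json

    # Naive recursive Fibonacci: exponential time, used here as a CPU-bound workload.
    def fibonacci(n: int) -> int:
        if n <= 1:
            return n
        return fibonacci(n - 1) + fibonacci(n - 2)

    def handler(event, context):
        start = perf_counter()
        # Read "n" from the event payload, defaulting to 40 when absent.
        result = fibonacci(event.get('n', 40))
        return {
            'statusCode': 200,
            # The body is returned as a JSON-encoded string.
            'body': json.dumps({
                'result': result,
                'duration_ms': (perf_counter() - start) * 1000
            })
        }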
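Before deploying, the handler can be exercised locally. The sketch below repeats the function so that it is self-contained, passes a plain dict as the event and `None` as the context (the handler never reads the context), and uses `n = 20` as an arbitrary small input to keep the run short. Note that `body` is a JSON string, so it needs to be decoded before its fields can be read.

```python
import json
from time import perf_counter


def fibonacci(n: int) -> int:
    """Naive recursive Fibonacci, same shape as the Lambda function above."""
    if n <= 1:
        return n
    return fibonacci(n - 1) + fibonacci(n - 2)


def handler(event, context):
    start = perf_counter()
    result = fibonacci(event.get("n", 40))
    return {
        "statusCode": 200,
        "body": json.dumps({
            "result": result,
            "duration_ms": (perf_counter() - start) * 1000,
        }),
    }


# Local smoke test: a dict stands in for the event, None for the context.
# n=20 is an arbitrary small value, not the benchmark default of 40.
response = handler({"n": 20}, None)
body = json.loads(response["body"])  # decode the JSON-encoded body
print(body["result"], f"{body['duration_ms']:.2f} ms")
```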

Rust Lambda Function

The Rust Lambda function also calculates the Fibonacci sequence recursively. Here's the code:

    // Note: handler_fn is the pre-0.5 lambda_runtime API; newer releases use service_fn with LambdaEvent.
    use lambda_runtime::{handler_fn, Context, Error};
    use serde_json::{json, Value};

    // Naive recursive Fibonacci: exponential time, used here as a CPU-bound workload.
    fn fibonacci(n: u32) -> u64 {
        if n <= 1 {
            return n as u64;
        }
        fibonacci(n - 1) + fibonacci(n - 2)
    }

    async fn handler(event: Value, _: Context) -> Result<Value, Error> {
        // Read "n" from the event payload, defaulting to 40 when absent.
        let n = event["n"].as_u64().unwrap_or(40) as u32;
        let start = std::time::Instant::now();
        let result = fibonacci(n);
        let duration_ms = start.elapsed().as_millis();
        Ok(json!({
            "statusCode": 200,
            "body": {
                "result": result,
                "duration_ms": duration_ms
            }
        }))
    }

    #[tokio::main]
    async fn main() -> Result<(), Error> {
        lambda_runtime::run(handler_fn(handler)).await?;
        Ok(())
    }

Benchmarking

Benchmark Script

The benchmark.sh script automates the process of benchmarking the Lambda functions. It:

- Validates the Lambda configurations.

- Invokes each function multiple times (default: 10 iterations).

- Measures and averages the execution times.
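The contents of benchmark.sh are not reproduced in this post, so the following is only a Python sketch of the same invoke, time, and average loop, not the script itself. The `benchmark` helper is a hypothetical name, and a local Fibonacci call stands in for the Lambda invocation; in a real run the callable would wrap an actual invocation of the deployed function.

```python
from statistics import mean
from time import perf_counter
from typing import Callable, List


def benchmark(invoke: Callable[[], object], iterations: int = 10) -> List[float]:
    """Call `invoke` repeatedly and return each wall-clock duration in ms."""
    durations_ms = []
    for _ in range(iterations):
        start = perf_counter()
        invoke()
        durations_ms.append((perf_counter() - start) * 1000)
    return durations_ms


def fibonacci(n: int) -> int:
    if n <= 1:
        return n
    return fibonacci(n - 1) + fibonacci(n - 2)


# Stand-in workload (assumption): a local call instead of a Lambda invocation.
timings = benchmark(lambda: fibonacci(18), iterations=5)
for i, ms in enumerate(timings, start=1):
    print(f"Invocation {i}: {ms:.2f} ms")
print(f"Average execution time: {mean(timings):.2f} ms")
```

Timing on the client side like this includes any invocation overhead, which is worth keeping in mind when comparing against the duration a function reports about itself.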

Usage

./benchmark.sh [--payload <payload>] [--iterations <iterations>]

Examples

- Default payload ({"n": 40}) and 10 iterations:

./benchmark.sh

- Custom payload ({"n": 28}) and 5 iterations:

./benchmark.sh --payload '{"n": 28}' --iterations 5

Memory Configuration

The update-memory.sh script updates the memory allocation for both Lambda functions. It defaults to 3,000 MB but allows for custom memory sizes.

Usage

./update-memory.sh [--memory <memory>]

Examples

- Default memory (3,000 MB):

./update-memory.sh

- Custom memory (1,792 MB):

./update-memory.sh --memory 1792

Results

Sample Output

Validating Lambda configurations...

Validation for FibonacciFunction:

Architecture: arm64

Memory Size: 3000 MB

Runtime: python3.9

Timeout: 10 seconds

Validation for rust-benchmark:

Architecture: arm64

Memory Size: 3000 MB

Runtime: provided.al2023

Timeout: 10 seconds

Benchmarking Python Lambda function...

Invocation 1: 10449 ms

Invocation 2: 10459 ms

Invocation 3: 10466 ms

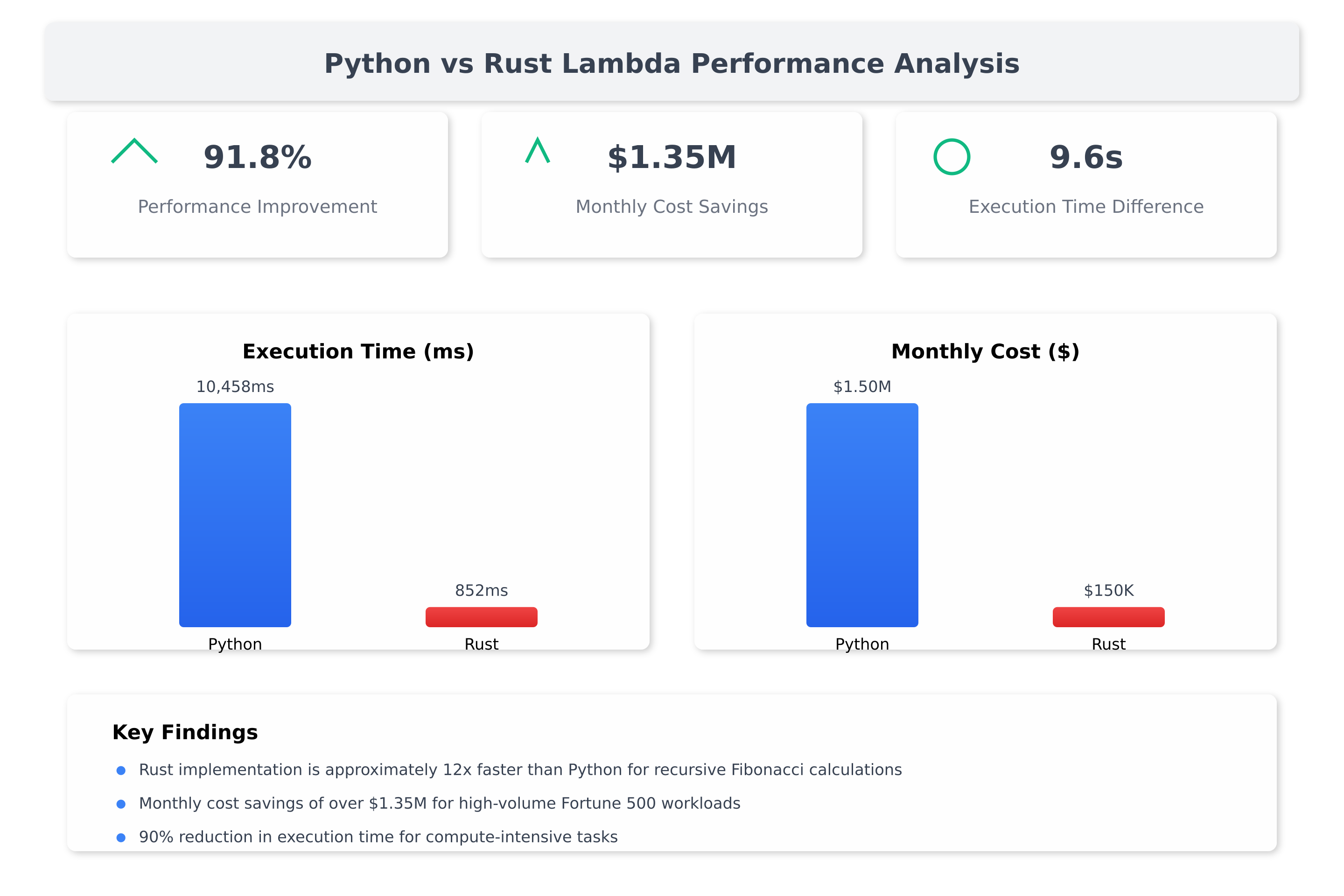

Average execution time for FibonacciFunction: 10458 ms

Benchmarking Rust Lambda function...

Invocation 1: 864 ms

Invocation 2: 849 ms

Invocation 3: 845 ms

Average execution time for rust-benchmark: 852 ms

Key Observations

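- Python: About 12 times slower than Rust on this workload (10,458 ms vs. 852 ms on average). Both functions run the same exponential-time recursive algorithm, so the gap reflects the interpreter's per-call overhead rather than a difference in algorithm.

- Rust: Much faster because it is compiled ahead of time to optimized native code.

- Memory Impact: Lambda allocates CPU in proportion to configured memory, so increasing memory can reduce execution time. For single-threaded code like this, the benefit typically levels off once the function has a full vCPU, which AWS documents as about 1,769 MB.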
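To put a number on the gap, the invocation times from the sample output above can be parsed and averaged. This is a small sketch: the two log strings are copied from the sample output, and `parse_ms` is a hypothetical helper name.

```python
import re
from statistics import mean
from typing import List

# Invocation lines copied from the sample output above.
PYTHON_LOG = """Invocation 1: 10449 ms
Invocation 2: 10459 ms
Invocation 3: 10466 ms"""

RUST_LOG = """Invocation 1: 864 ms
Invocation 2: 849 ms
Invocation 3: 845 ms"""


def parse_ms(log: str) -> List[int]:
    """Extract the millisecond values from 'Invocation N: X ms' lines."""
    return [int(ms) for ms in re.findall(r"Invocation \d+: (\d+) ms", log)]


python_avg = mean(parse_ms(PYTHON_LOG))
rust_avg = mean(parse_ms(RUST_LOG))
speedup = python_avg / rust_avg

print(f"Python average: {python_avg:.0f} ms")
print(f"Rust average:   {rust_avg:.0f} ms")
print(f"Rust speedup:   {speedup:.1f}x")
```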

Cost Analysis

Hypothetical Fortune 500 Workload

Assumptions

- Daily Invocations: 100 million invocations per day.

- Monthly Invocations: 3 billion invocations per month (30 days).

- Memory Allocation: 3,000 MB (3 GB).

- Execution Time (rounded from the benchmark averages above):

  - Python: 10 seconds per invocation.

  - Rust: 1 second per invocation.

Cost Calculation

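- Number of Requests (at $0.20 per million requests):

  Cost = (3,000,000,000 / 1,000,000) × $0.20 = $600

- Execution Time (GB-seconds = invocations × 3 GB × seconds per invocation; the dollar figures correspond to a rate of about $0.00001667 per GB-second):

  - Python: 3,000,000,000 × 3 GB × 10 s = 90 billion GB-seconds → $1,500,300

  - Rust: 3,000,000,000 × 3 GB × 1 s = 9 billion GB-seconds → $150,030

- Total Cost (requests plus execution time):

  - Python: $1,500,900 per month

  - Rust: $150,630 per month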

Cost Savings

Savings = $1,500,900 - $150,630 = $1,350,270 per month
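The arithmetic above can be reproduced with a short cost model. This is a sketch under stated assumptions: the per-GB-second rate of $0.00001667 is inferred from the dollar figures in this post rather than quoted from a price list, tiered or architecture-specific pricing is ignored, and `monthly_cost` is a hypothetical helper. `Decimal` is used so the totals come out exact.

```python
from decimal import Decimal

REQUEST_PRICE_PER_MILLION = Decimal("0.20")
# Assumption: rate implied by the figures in this post, not an official quote.
GB_SECOND_PRICE = Decimal("0.00001667")


def monthly_cost(invocations: int, memory_gb: int, seconds: int) -> Decimal:
    """Request charges plus compute charges for one month."""
    request_cost = Decimal(invocations) / Decimal(1_000_000) * REQUEST_PRICE_PER_MILLION
    gb_seconds = Decimal(invocations) * Decimal(memory_gb) * Decimal(seconds)
    return request_cost + gb_seconds * GB_SECOND_PRICE


INVOCATIONS = 3_000_000_000  # 100 million per day for 30 days

python_cost = monthly_cost(INVOCATIONS, memory_gb=3, seconds=10)
rust_cost = monthly_cost(INVOCATIONS, memory_gb=3, seconds=1)
savings = python_cost - rust_cost

print(f"Python:  ${python_cost:,.0f}")
print(f"Rust:    ${rust_cost:,.0f}")
print(f"Savings: ${savings:,.0f} ({savings / python_cost * 100:.1f}%)")
```

Because compute charges dominate the request charges at this scale, the cost ratio tracks the execution-time ratio almost exactly.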

Optimization

Python

- Use memoization to reduce the time complexity of the Fibonacci algorithm from exponential to linear:

    from functools import lru_cache

    # Cache every computed value so each n is evaluated only once.
    @lru_cache(maxsize=None)
    def fibonacci(n: int) -> int:
        if n <= 1:
            return n
        return fibonacci(n - 1) + fibonacci(n - 2)
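The effect of memoization can be seen by counting calls. The sketch below compares the naive version with an `lru_cache` version for `n = 20`, an arbitrary small input; the names `fib_naive`, `fib_memo`, and `naive_calls` are illustrative only.

```python
from functools import lru_cache

naive_calls = 0  # number of times the naive function body runs


def fib_naive(n: int) -> int:
    global naive_calls
    naive_calls += 1
    if n <= 1:
        return n
    return fib_naive(n - 1) + fib_naive(n - 2)


@lru_cache(maxsize=None)
def fib_memo(n: int) -> int:
    if n <= 1:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)


N = 20
assert fib_naive(N) == fib_memo(N)

# Cache misses equal the number of times the memoized body actually ran.
memo_calls = fib_memo.cache_info().misses
print(f"naive calls:    {naive_calls}")
print(f"memoized calls: {memo_calls}")
```

The memoized version is still recursive, so a very large `n` on a cold cache can hit Python's recursion limit.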

Rust

- Use an iterative approach to calculate Fibonacci:

    fn fibonacci(n: u32) -> u64 {
        let mut a = 0;
        let mut b = 1;
        for _ in 0..n {
            let temp = a;
            a = b;
            b = temp + b;
        }
        a
    }
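For comparison, here is the same iterative approach as a Python sketch, along with a check of how far a 64-bit unsigned result can go. Python integers are arbitrary precision, so the snippet can compute the bound directly; `fibonacci_iter` and `largest_u64_n` are illustrative names.

```python
def fibonacci_iter(n: int) -> int:
    """Iterative Fibonacci: O(n) additions and constant extra space."""
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


U64_MAX = 2**64 - 1

# Find the largest n whose Fibonacci number still fits in an unsigned 64-bit integer.
largest_u64_n = 0
while fibonacci_iter(largest_u64_n + 1) <= U64_MAX:
    largest_u64_n += 1

print(f"fibonacci(40) = {fibonacci_iter(40)}")
print(f"largest n that fits in u64: {largest_u64_n}")
```

This matters for the Rust version because its return type is `u64`. Its loop also computes the next Fibonacci number on the final iteration, so the addition can overflow one step before the returned value itself would.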

Best Practices

- Optimize Algorithms: Use efficient algorithms to reduce execution time.

- Adjust Memory: Increase memory allocation to improve CPU power.

- Use Provisioned Concurrency: Reduce cold start latency for performance-critical applications.

- Monitor Logs: Use CloudWatch logs to debug and optimize Lambda functions.

Conclusion

This project demonstrates how to benchmark and compare the performance of Python and Rust Lambda functions on AWS. By adjusting memory allocation and optimizing the Fibonacci algorithm, you can achieve significant performance improvements. The provided scripts automate the process, making it easy to test and analyze different configurations.

For the hypothetical Fortune 500 data engineering workload modeled here, switching from Python to Rust cuts the estimated monthly cost by about 90% ($1,350,270 per month). Optimizing algorithms, memory allocation, and invocation patterns can reduce costs further and improve performance.

License

This project is licensed under the MIT License.

Tags: AWS Lambda, Python, Rust, Benchmarking, Cost Analysis, Data Engineering

Repository: AWS-Gen-AI/python-vs-rust/rust-benchmark
