Deep Dive: Deploying Open Source LLMs on AWS - From Research Models to Production Systems

The landscape of Large Language Models is rapidly evolving beyond commercial API services. While solutions like OpenAI's GPT models and Anthropic's Claude have dominated the market, a new frontier is emerging: production-grade open source LLM deployment. Pragmatic AI Labs' new course "Open Source LLMs on AWS" provides a comprehensive roadmap for organizations looking to take control of their AI infrastructure.

The Rise of Open Source LLMs

Noah Gift, the course instructor, draws a compelling parallel between the current LLM landscape and the evolution of operating systems. Just as Linux eventually displaced proprietary Unix systems like Solaris through the "cost of free," we're witnessing a similar transformation in AI. Organizations are increasingly seeking alternatives to commercial APIs for several critical reasons:

- Complete data sovereignty and privacy control

- Predictable, fixed infrastructure costs

- Ability to customize and optimize for specific use cases

- Freedom to deploy on-premise or in preferred cloud environments

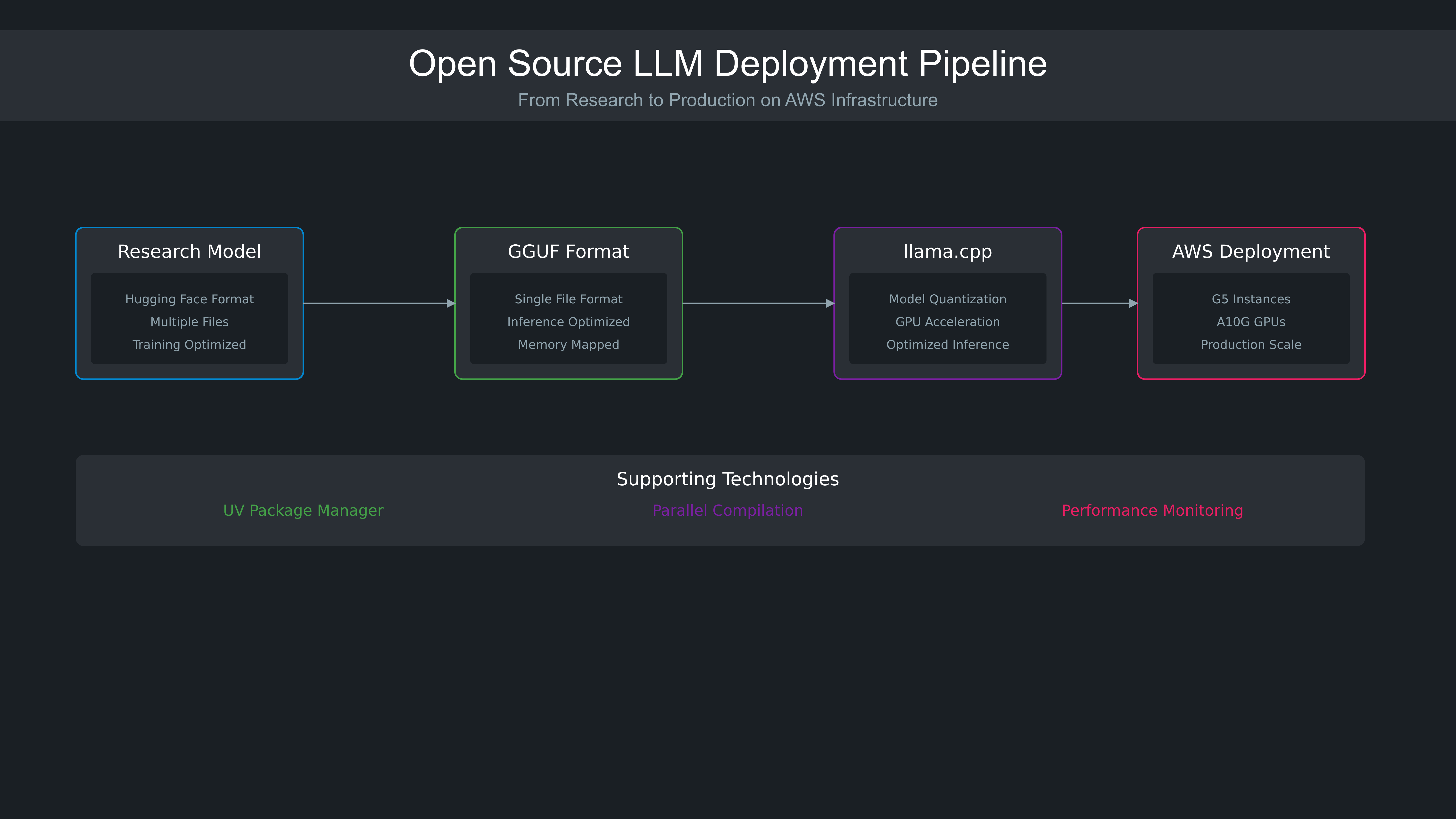

Core Technologies and Architecture

The Power of llama.cpp

The course delves deep into llama.cpp, a cornerstone technology for production LLM deployment. Key aspects covered include:

Optimized Compilation

- Architecture-specific optimizations using CUDA

- Parallel compilation, with Amdahl's Law used to estimate the achievable speedup

- Advanced GPU acceleration techniques

- Thread utilization and performance tuning
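Amdahl's Law does not make a build faster by itself. It bounds the speedup that parallel compile jobs can deliver when part of the build (configuration, final linking) stays serial. The following is a minimal sketch. The parallel fraction `p` is an assumed value that you would measure for your own llama.cpp build, not a figure from the course.

```python
import os


def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Theoretical speedup from Amdahl's Law: 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / workers)


if __name__ == "__main__":
    p = 0.95  # assumed: 95% of build time parallelizes across compile jobs
    cores = os.cpu_count() or 1
    for n in (1, 4, 16, 48, cores):
        print(f"{n:>3} jobs -> {amdahl_speedup(p, n):5.2f}x")
    # Upper bound as the job count grows without limit: 1 / (1 - p)
    print(f"ceiling: {1 / (1 - p):.1f}x")
```

With `p = 0.95`, 48 jobs (the vCPU count of a g5.12xlarge) yield only about 14.3x, and no job count can exceed 20x. This is why very large `-j` values passed to `cmake --build` bring diminishing returns.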

Model Quantization

- Converting 16-bit floats to 4-bit integers

- Retaining roughly 98% of model quality while reducing size by about 70%

- Balance between model size and inference speed

- Memory mapping for efficient loading
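A simplified block quantizer makes the 16-bit-to-4-bit arithmetic concrete. This sketch is loosely modeled on the layout of llama.cpp's `Q4_0` type, where blocks of 32 weights share one 16-bit scale. The real kernels differ in rounding and bit packing, so read this as an illustration of where the roughly 70% size reduction comes from, not as llama.cpp's algorithm.

```python
import random

BLOCK_SIZE = 32  # weights per block, as in llama.cpp's Q4_0 layout


def quantize_block(weights: list[float]) -> tuple[float, list[int]]:
    """Symmetric 4-bit quantization: one float scale plus ints in [-8, 7]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return scale, q


def dequantize_block(scale: float, q: list[int]) -> list[float]:
    """Approximate reconstruction of the original weights."""
    return [scale * v for v in q]


def block_bytes(bits_per_weight: int, scale_bytes: int = 0) -> int:
    """Storage for one block: packed weights plus any per-block scale."""
    return BLOCK_SIZE * bits_per_weight // 8 + scale_bytes


random.seed(0)
block = [random.gauss(0.0, 0.02) for _ in range(BLOCK_SIZE)]
scale, q = quantize_block(block)
restored = dequantize_block(scale, q)
max_err = max(abs(a - b) for a, b in zip(block, restored))

fp16 = block_bytes(16)              # 32 weights * 2 bytes = 64 bytes
q4 = block_bytes(4, scale_bytes=2)  # 16 bytes of 4-bit values + 2-byte scale = 18 bytes
print(f"fp16: {fp16} B, 4-bit: {q4} B, reduction: {1 - q4 / fp16:.1%}")
# prints: fp16: 64 B, 4-bit: 18 B, reduction: 71.9%
print(f"max reconstruction error: {max_err:.5f} (bound: scale / 2 = {scale / 2:.5f})")
```

The per-block scale keeps the rounding error of each weight within half a quantization step. The 2-byte scale is also why the reduction is closer to 72% than the naive 75%.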

GGUF Format: The Bridge Between Research and Production

The GGUF format serves as a crucial bridge between research models and production deployment. The course explains:

Format Benefits

- Single-file packaging of model weights, configuration, and tokenizer

- Standardized interface across different model types

- Memory-mapped loading for efficient resource utilization

- Cross-platform compatibility
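A GGUF file opens with a small fixed header that precedes its metadata and tensor data. The sketch below reads only that header. It follows the layout described in the GGUF specification in the ggml repository: the magic bytes `GGUF`, a 32-bit version, then 64-bit tensor and metadata counts for version 2 and later. The counts in the demo are made-up values, not those of any particular model.

```python
import io
import struct
from typing import BinaryIO, NamedTuple

GGUF_MAGIC = b"GGUF"


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def read_gguf_header(f: BinaryIO) -> GGUFHeader:
    """Parse the fixed-size GGUF header (little-endian, format version 2 or later)."""
    magic = f.read(4)
    if magic != GGUF_MAGIC:
        raise ValueError(f"not a GGUF file (magic={magic!r})")
    (version,) = struct.unpack("<I", f.read(4))
    if version < 2:
        raise ValueError(f"GGUF v{version} uses 32-bit counts; not handled here")
    tensor_count, kv_count = struct.unpack("<QQ", f.read(16))
    return GGUFHeader(version, tensor_count, kv_count)


# Self-contained demo: a synthetic header, since no model file is assumed to exist.
fake = io.BytesIO(GGUF_MAGIC + struct.pack("<IQQ", 3, 291, 24))
print(read_gguf_header(fake))
# prints: GGUFHeader(version=3, tensor_count=291, metadata_kv_count=24)
```

For a real model, open the file with `open(path, "rb")`. The metadata key/value pairs (architecture, tokenizer vocabulary, quantization type) and the tensor descriptors follow this header, which is what lets a single file carry weights, configuration, and tokenizer together. For anything beyond the header, the `gguf` Python package maintained in the llama.cpp repository is safer than hand-rolled parsing.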

Conversion Pipeline

- Converting from Hugging Face format to GGUF

- Optimizing for inference vs. training

- Managing model metadata and configuration

- Handling different quantization levels
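Choosing a quantization level mostly comes down to knowing what each level costs in storage and memory. The back-of-the-envelope sketch below uses the effective bits per weight of three llama.cpp types (`F16`, `Q8_0`, `Q4_0`) and a hypothetical 7-billion-parameter model. Real GGUF files are somewhat larger because of metadata and because converters often keep some tensors at higher precision.

```python
# Effective bits per weight, including per-block scales:
#   F16  : 16 bits, no scale
#   Q8_0 : 32 x 8-bit ints + 16-bit scale = 272 bits / 32 weights = 8.5
#   Q4_0 : 32 x 4-bit ints + 16-bit scale = 144 bits / 32 weights = 4.5
BITS_PER_WEIGHT = {"F16": 16.0, "Q8_0": 8.5, "Q4_0": 4.5}


def estimated_size_gb(n_params: float, quant_type: str) -> float:
    """Approximate weight storage in GB (1e9 bytes), ignoring metadata."""
    return n_params * BITS_PER_WEIGHT[quant_type] / 8 / 1e9


n_params = 7e9  # hypothetical 7B-parameter model
for qt in BITS_PER_WEIGHT:
    print(f"{qt:>5}: ~{estimated_size_gb(n_params, qt):.1f} GB")
# prints roughly: F16 ~14.0 GB, Q8_0 ~7.4 GB, Q4_0 ~3.9 GB
```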

UV: Revolutionizing Python Package Management

The course introduces UV, a fast Python package and project manager written in Rust, as a solution to Python's packaging challenges:

Technical Advantages

- Very fast package installation, with near-instant installs of packages already in its cache

- Automatic virtual environment management

- Deterministic dependency resolution

- Ephemeral environment creation

Practical Implementation

- Integration with existing Python projects

- Managing complex ML dependencies

- Reproducible environment creation

- Clean cache management

Production Deployment on AWS

Infrastructure Optimization

The course provides detailed insights into AWS deployment strategies:

Hardware Selection

- g5.12xlarge instance optimization

- Managing multiple A10G GPUs

- Storage configuration for large models

- Memory and CPU utilization patterns
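For hardware planning, the first question is whether a model's weights fit in the instance's combined GPU memory. A g5.12xlarge carries four NVIDIA A10G GPUs with 24 GB each, for 96 GB in total. The sketch below is a rough capacity check. The 20% headroom reserved for the KV cache and runtime overhead is an assumption to tune, not a measured figure, and the model sizes are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuPool:
    count: int
    gb_per_gpu: float

    @property
    def total_gb(self) -> float:
        return self.count * self.gb_per_gpu


# g5.12xlarge: 4 x NVIDIA A10G, 24 GB each
G5_12XLARGE = GpuPool(count=4, gb_per_gpu=24.0)


def fits_in_vram(weights_gb: float, pool: GpuPool, headroom: float = 0.20) -> bool:
    """True if the weights fit after reserving a fraction of VRAM.

    `headroom` is an assumed reserve for the KV cache, activations, and CUDA
    context; the right value depends on context length and batch size.
    """
    return weights_gb <= pool.total_gb * (1.0 - headroom)


def per_gpu_share(weights_gb: float, pool: GpuPool) -> float:
    """Weights per GPU if layers are split evenly across the pool."""
    return weights_gb / pool.count


for weights_gb in (14.0, 40.0, 140.0):  # hypothetical model sizes in GB
    print(f"{weights_gb:6.1f} GB -> fits: {fits_in_vram(weights_gb, G5_12XLARGE)}, "
          f"~{per_gpu_share(weights_gb, G5_12XLARGE):.1f} GB per GPU")
```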

Performance Tuning

- GPU layer optimization

- Thread management for inference

- Batch size configuration

- Memory mapping strategies
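Each of these tuning knobs maps to a llama.cpp runtime option:

- `-ngl` (`--n-gpu-layers`) for GPU layer offload
- `-t` for CPU threads
- `-b` for batch size
- `--no-mmap` to disable memory mapping

The sketch below collects these options in one place and builds a `llama-server` command line. The default values are illustrative starting points, not course recommendations. The model path is a placeholder. Check flag names against `llama-server --help` for the build you compile, because options change between releases.

```python
import os
import shlex
from dataclasses import dataclass


@dataclass
class ServerConfig:
    model_path: str              # path to a .gguf file (placeholder in the example)
    n_gpu_layers: int = 999      # a value above the layer count offloads every layer
    threads: int = max(1, (os.cpu_count() or 2) // 2)  # heuristic: roughly physical cores
    batch_size: int = 512        # prompt-processing batch size
    ctx_size: int = 4096         # context window in tokens
    use_mmap: bool = True        # memory-map the model file instead of reading it all
    port: int = 8080

    def to_argv(self) -> list[str]:
        """Build the argument vector for launching llama-server."""
        argv = [
            "llama-server",
            "-m", self.model_path,
            "-ngl", str(self.n_gpu_layers),
            "-t", str(self.threads),
            "-b", str(self.batch_size),
            "-c", str(self.ctx_size),
            "--port", str(self.port),
        ]
        if not self.use_mmap:
            argv.append("--no-mmap")
        return argv


cfg = ServerConfig(model_path="models/qwen-q4_0.gguf", use_mmap=False)
print(shlex.join(cfg.to_argv()))
```

Keeping the options in a single structure makes it easy to change one knob at a time and compare measurements between runs.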

Practical Implementation Examples

Qwen Integration

- Model download and conversion

- Quantization optimization

- Chat interface implementation

- Performance monitoring
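For the chat interface, llama.cpp's `llama-server` exposes an OpenAI-compatible `/v1/chat/completions` endpoint, so a client needs only the standard library. The following is a minimal sketch. It assumes a server is already running on its default port 8080 with a Qwen GGUF model loaded. The system prompt and temperature are illustrative. The demo only builds the request, so it runs without a live server.

```python
import json
import urllib.request


def build_chat_request(
    messages: list[dict[str, str]],
    base_url: str = "http://localhost:8080",
    temperature: float = 0.7,
) -> urllib.request.Request:
    """Build a POST to an OpenAI-compatible /v1/chat/completions endpoint."""
    body = json.dumps({"messages": messages, "temperature": temperature}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(messages: list[dict[str, str]], timeout: float = 60.0) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(messages), timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


history = [
    {"role": "system", "content": "You are a concise assistant."},
    {"role": "user", "content": "Summarize what GGUF is in one sentence."},
]
req = build_chat_request(history)
print(req.get_method(), req.full_url)
# With a server running: print(chat(history))
```

To hold a multi-turn conversation, append each reply to `history` as an `assistant` message before sending the next user turn.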

Production Considerations

- Load balancing strategies

- Error handling and reliability

- Monitoring and logging

- Resource scaling
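Load balancing and error handling often meet in the client. Requests are spread across several inference servers, and a failed request is retried on a different one. The sketch below shows round-robin selection with exponential backoff. The backend addresses and the `send` callable are hypothetical stand-ins for whatever HTTP client and hosts a deployment uses. In production this logic more often lives in a load balancer with health checks, such as an AWS Application Load Balancer, but the retry policy follows the same idea.

```python
import itertools
import random
import time
from typing import Callable


class RoundRobinClient:
    """Round-robin load balancing with retries and exponential backoff.

    `send` is a hypothetical transport: it takes a backend URL and a prompt and
    returns the reply, raising ConnectionError on failure.
    """

    def __init__(self, backends: list[str], send: Callable[[str, str], str],
                 max_attempts: int = 3, base_delay: float = 0.5) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._cycle = itertools.cycle(backends)
        self._send = send
        self.max_attempts = max_attempts
        self.base_delay = base_delay

    def complete(self, prompt: str) -> str:
        last_error = None
        for attempt in range(self.max_attempts):
            backend = next(self._cycle)  # each retry moves to the next backend
            try:
                return self._send(backend, prompt)
            except ConnectionError as exc:
                last_error = exc
                if attempt + 1 < self.max_attempts:
                    # Backoff of base_delay * 2**attempt, scaled by jitter in [0.5, 1.0]
                    delay = self.base_delay * 2 ** attempt
                    time.sleep(delay * random.uniform(0.5, 1.0))
        raise RuntimeError(f"all {self.max_attempts} attempts failed") from last_error


calls = []


def flaky_send(backend: str, prompt: str) -> str:
    """Hypothetical transport in which the first backend is down."""
    calls.append(backend)
    if backend == "http://10.0.0.1:8080":
        raise ConnectionError("backend down")
    return f"{backend} answered: {prompt}"


client = RoundRobinClient(["http://10.0.0.1:8080", "http://10.0.0.2:8080"],
                          flaky_send, base_delay=0.01)
print(client.complete("ping"))
```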

Real-World Benefits and Applications

Technical Advantages

Performance Control

- Hardware-specific optimizations

- Custom quantization levels

- Inference speed tuning

- Resource utilization management
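Inference speed tuning needs a repeatable measurement before and after each change, whether that change affects GPU layers, threads, or quantization level. Two numbers usually matter: time to first token and sustained tokens per second. The sketch below times any token iterator. `fake_stream` is a hypothetical stand-in for a real streaming client.

```python
import time
from typing import Iterable, Iterator


def measure_throughput(tokens: Iterable[str]) -> tuple[int, float, float]:
    """Consume a token stream; return (token count, time to first token, tokens/sec)."""
    start = time.perf_counter()
    first_token_at = None
    count = 0
    for _ in tokens:
        if first_token_at is None:
            first_token_at = time.perf_counter() - start
        count += 1
    elapsed = time.perf_counter() - start
    ttft = first_token_at if first_token_at is not None else float("nan")
    rate = count / elapsed if elapsed > 0 else 0.0
    return count, ttft, rate


def fake_stream(n: int, delay: float) -> Iterator[str]:
    """Hypothetical stand-in for a model's streaming output."""
    for i in range(n):
        time.sleep(delay)
        yield f"tok{i}"


count, ttft, rate = measure_throughput(fake_stream(20, 0.01))
print(f"{count} tokens, first token after {ttft * 1000:.0f} ms, {rate:.1f} tok/s")
```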

Deployment Flexibility

- On-premise deployment options

- Cloud provider independence

- Hybrid deployment strategies

- Custom infrastructure integration

Business Benefits

Cost Optimization

- Fixed infrastructure costs vs. per-token pricing

- Resource utilization efficiency

- Predictable scaling costs

- ROI optimization
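The fixed-versus-per-token trade-off reduces to a break-even calculation. An always-on instance costs the same whether it is idle or saturated, so self-hosting pays off only above a certain sustained volume. The sketch below uses clearly hypothetical prices, not actual AWS or vendor rates. It assumes the instance can actually serve the traffic and ignores engineering and operations time, which belongs in any real ROI estimate.

```python
def breakeven_tokens_per_hour(instance_cost_per_hour: float,
                              api_price_per_million_tokens: float) -> float:
    """Tokens per hour above which a fixed-cost instance beats per-token pricing."""
    if api_price_per_million_tokens <= 0:
        raise ValueError("API price must be positive")
    return instance_cost_per_hour / api_price_per_million_tokens * 1_000_000


def monthly_costs(tokens_per_hour: float, instance_cost_per_hour: float,
                  api_price_per_million_tokens: float,
                  hours: float = 730) -> tuple[float, float]:
    """(self-hosted, API) cost for a month of steady traffic; 730 h is about one month."""
    self_hosted = instance_cost_per_hour * hours
    api = tokens_per_hour * hours / 1_000_000 * api_price_per_million_tokens
    return self_hosted, api


# Hypothetical prices for illustration only; substitute current pricing.
INSTANCE_PER_HOUR = 6.00
API_PER_MILLION = 2.00

print(f"break-even: {breakeven_tokens_per_hour(INSTANCE_PER_HOUR, API_PER_MILLION):,.0f} tokens/hour")
# prints: break-even: 3,000,000 tokens/hour
print(monthly_costs(5_000_000, INSTANCE_PER_HOUR, API_PER_MILLION))
```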

Data Control

- Complete privacy preservation

- Regulatory compliance

- Custom data handling

- Audit capability

Course Structure and Learning Path

The comprehensive curriculum spans two weeks with 34 lessons, structured for both depth and practical application:

Week 1: Foundations and Core Technologies

- Introduction to open source LLM deployment

- Core technology stack implementation

- Parallel compilation and optimization

- GGUF format and model conversion

Week 2: Implementation and Production Deployment

- AWS infrastructure setup and optimization

- Model integration and fine-tuning

- Production deployment strategies

- Performance monitoring and optimization

Each module includes hands-on labs, quizzes, and practical exercises, culminating in a certificate. The course material is derived from curriculum taught at Duke University and Northwestern University, ensuring academic rigor while maintaining a strong focus on practical implementation.

Looking Ahead

As the AI landscape continues to evolve, the ability to deploy and manage open source LLMs will become increasingly crucial. This course provides the foundation for organizations to take control of their AI infrastructure, offering a path to independence from commercial API providers while maintaining production-grade performance and reliability.

Ready to master open source LLM deployment? Join over 500,000 learners and start your journey at https://ds500.paiml.com/code

Learn more at Pragmatic AI Labs